Usability of a Mobile Application for Patients Rehabilitating in their Community

Jeanette R. Little, BS, MS1, Holly H. Pavliscsak, BS, MHSA2, Mabel Cooper, RN, BSN, CCRC3, Lois Goldstein, RN, BSN3, James Tong, BS, MBA4, Stephanie J. Fonda, PhD5

1Mobile Health Innovation Center, Telemedicine and Advanced Technology Research Center, United States Army Medical Research and Materiel Command; 2The Geneva Foundation, Assigned to the Mobile Health Care Innovation Center (MHIC), Telemedicine and Advanced Technology Research Center (TATRC), United States Army Medical Research and Materiel Command (USAMRMC); 3The Geneva Foundation, Assigned to the Mobile Health Care Innovation Center (MHIC), Telemedicine and Advanced Technology Research Center (TATRC); 4IMS Health, Government Solutions; 5The Geneva Foundation, Assigned to Telemedicine and Advanced Technology Research Center (TATRC)

Corresponding Author: jeanette.r.little.civ@mail.mil

Journal MTM 6:3:14–24, 2017

Background: Usability may influence adherence to the use of mobile technologies, yet it is rarely examined.

Aims: This article examines the usability of a mobile application (“mCare”), provided to support injured Service Members rehabilitating in their communities, and assesses how usability ratings relate to the users’ background characteristics and their usage of mCare.

Methods: Data were from the intervention arm (n=95) of a 36-week, randomized controlled trial. Usability was measured with the System Usability Scale (SUS) (n=41) and semi-structured interviews (n=49). The analysis compared SUS scores by cohort characteristics, with t-tests and ANOVA. Interview responses were coded for valence. Pearson correlations quantified the association between SUS scores and usage.

Results: Mean total SUS score was 78.0 [standard deviation (SD) = 21.1; ‘A’], usability sub-score was 76.7 (SD = 22.9; ‘A’), and learnability sub-score was 82.0 (SD = 25.0; ‘A+’). SUS scores differed marginally by mobile device type (p = 0.07) and living arrangement (p = 0.06). Participants who had behavioral health problems, were older, were Warrant Officers, and/or used a specific wireless carrier rated mCare lower, though these differences were not statistically significant. Interview responses provided insight into why SUS scores might have been lower in some cases. Higher usage was associated with higher total SUS scores (r = 0.46, p = 0.004).

Conclusion: Overall, mCare was rated favorably. Higher usage was correlated with higher usability scores. Providers planning to use mCare with patients should consider patients’ characteristics (e.g., type of mobile phone, living arrangement), as these could affect the user experience.

Keywords: Mobile health, Telemedicine, Technology assessment, Utilization, Patient care management

Introduction

In 2008, the United States Army Medical Department, through the Telemedicine and Advanced Technology Research Center (TATRC), developed and tested ‘mCare’, a secure mobile application for providing care management to wounded men and women in the military who were rehabilitating in their communities. The mCare application facilitates and enhances contact between the person rehabilitating in the community and their case managers and other care team members. It sends to the user’s phone: appointment reminders; health and wellness tips; community resources and administrative announcements; and questionnaires on various aspects of the user’s well-being. It can also transmit the user’s responses to questionnaires back to their care team. The mCare application was developed to support Service Members with mild traumatic brain injury (mTBI) or post-traumatic stress (PTS) rehabilitating in the community, with the goal of eventually expanding the application to support other care management use cases.

To assess the impact of mCare on users’ quality of life and adherence to appointments and rehabilitation goals, the TATRC team conducted a randomized controlled trial (RCT) in which participants were recruited and allocated to receive standard care management or standard care management plus mCare. As part of that trial, the TATRC team assessed usability of the mCare application, as perceived among those randomized to receive it. This article reports on the perceived usability of the application overall and how it relates to important characteristics of the mCare cohort, namely behavioral health, mTBI and PTS status, technology (e.g., type of phone), environment (i.e., population size of the participants’ community), and usage of the application. Although the TATRC team did not apply an iterative user-centered design model per se,2 and this is not a usability test performed in a laboratory environment for the purpose of finding problems,3,4 post-study usability evaluations such as this one provide important information on the translation and sustainment of new technologies into use outside of the scope of a study, from the perspective of users who have become ‘experts’ on the technology over time.

Methods

mCare application

The mCare application has been previously described.1 In brief, it is a secure, bidirectional application connected to a backend system that facilitates contact between Service Members and their care team members. The mCare application is remotely activated on a user’s mobile device. The system uses text messages to notify users when there is new information to view and respond to within the application. This new information includes daily questionnaires, appointment reminders, health tips, and administrative information. The backend system also provides a secure website that tracks all data from the mobile devices and generates escalation triggers (as needed) to the care team. To access the new information, the user must enter their mCare personal identification number (PIN), which is part of a secure two-factor authentication process that distinguishes mCare from most mobile health applications.
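The notify-then-sync pattern described above can be illustrated with a toy model. This is a minimal sketch under stated assumptions, not the mCare implementation: the class `ToyMCareClient`, its fields, and the notification wording are hypothetical; escalation triggers are not modeled; and only the PIN step of the two-factor process is represented.

```python
import hmac
from dataclasses import dataclass, field


@dataclass
class ToyMCareClient:
    """Toy notify-then-sync model; hypothetical, not the mCare implementation."""
    pin: str
    pending: list[str] = field(default_factory=list)   # content queued on the backend
    on_phone: list[str] = field(default_factory=list)  # content available in the app
    sms_log: list[str] = field(default_factory=list)   # text notifications sent

    def queue(self, item: str) -> None:
        # The backend queues new content and sends a text-message notification;
        # the content itself is not delivered by text.
        self.pending.append(item)
        self.sms_log.append("New mCare content is waiting")

    def login_and_sync(self, entered_pin: str) -> bool:
        # Entering the PIN gates access (constant-time comparison).
        if not hmac.compare_digest(entered_pin.encode(), self.pin.encode()):
            return False
        # A successful login triggers the sync that moves queued content to the phone.
        self.on_phone.extend(self.pending)
        self.pending.clear()
        return True


client = ToyMCareClient(pin="4321")
client.queue("Daily questionnaire: Pain Status")
client.queue("Appointment reminder")
print(client.login_and_sync("0000"))  # False: wrong PIN, nothing synced
print(client.login_and_sync("4321"))  # True: both items now on the phone
```

The separation between the text notification and the in-app content mirrors the description above: the text message only signals that something is waiting, and nothing reaches the phone until the user logs in.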

For the RCT, mCare sent a daily questionnaire, three health and wellness tips per week, administrative announcements, and appointment reminders 24 hours before, 90 minutes before, and 24 hours after each clinical encounter. The questionnaires covered one functional topic area per day (General Status, Pain Status, Energy and Sleep Status, Anger Management, Relationship Status, Transition Goal Status, and Mood Status) and each functional topic was repeated weekly. The mCare application also sent a Weight Status questionnaire once per month. The mCare questionnaires can be modified for other populations, as appropriate, making it a generalizable adjunct to standard care management for a variety of conditions.
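The reminder schedule and the weekly questionnaire rotation described above can be expressed as a small scheduling sketch. The function names and the example date are hypothetical, and mapping study day 0 to General Status is an assumption; the monthly Weight Status questionnaire, the health tips, and the administrative announcements are not modeled.

```python
from datetime import datetime, timedelta

# Reminder offsets stated for the RCT: 24 h before, 90 min before, 24 h after.
REMINDER_OFFSETS = (timedelta(hours=-24), timedelta(minutes=-90), timedelta(hours=24))

# The seven functional topics, in the order listed in the text. Mapping study
# day 0 to "General Status" is an assumption for illustration.
DAILY_TOPICS = (
    "General Status",
    "Pain Status",
    "Energy and Sleep Status",
    "Anger Management",
    "Relationship Status",
    "Transition Goal Status",
    "Mood Status",
)


def reminder_times(appointment: datetime) -> list[datetime]:
    """Return the three reminder send times for one clinical encounter."""
    return [appointment + offset for offset in REMINDER_OFFSETS]


def topic_for_day(study_day: int) -> str:
    """Return the questionnaire topic for a 0-based study day (repeats weekly)."""
    return DAILY_TOPICS[study_day % len(DAILY_TOPICS)]


# Prints 2010-03-09 14:00:00, then 2010-03-10 12:30:00, then 2010-03-11 14:00:00.
for t in reminder_times(datetime(2010, 3, 10, 14, 0)):
    print(t)
print(topic_for_day(8))  # Pain Status (day 8 is the second day of week 2)
```

Because each topic occupies one slot in a seven-day cycle, every functional area is revisited exactly once per week, which is what makes the weekly repetition described above possible.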

Study Design

Injured Service Members newly assigned to four participating Community-Based Warrior Transition Units (CBWTUs) (now called Community Care Units [CCUs]) were recruited to participate voluntarily in the RCT testing the efficacy and usability of mCare (n=182). Among other things, eligibility criteria included having a cell phone, proficiency in the use of that cell phone, and demonstrated ability to send and receive text messages. The data for this analysis are from the intervention arm (n=95). Henceforth, this article refers to participants in the intervention arm only.

The purpose of the CBWTUs was to help Service Members rehabilitate in their home communities and return to duty. If a participant’s assignment to one of the four participating CBWTUs ended (referred to as ‘out processed’), their time in the study concluded early, because assignment to a CBWTU was a criterion for study participation. In this study, Service Members left their CBWTU for the following reasons: ‘Release from Active Duty’ (n=28); ‘Separation’ (n=1); or return to a Military Treatment Facility (MTF) (n=3). Release from Active Duty applied to National Guard and Reserve soldiers and indicated that they were returning to their respective units and to their prior reserve status. Separation referred to a soldier leaving the service because their pre-established contract of service was complete and they had less than 20 years of service.

Once enrolled, participants in the intervention arm were given standard care management plus the mCare application, with all of its aforementioned features, and followed for up to 36 weeks. As part of standard care management, the CBWTU case managers contacted rehabilitating Service Members weekly, via telephone, to schedule appointments with medical specialists (which were individualized, according to the Service Member’s needs), monitor appointment attendance, assess progress toward therapy goals, and provide emotional support. Also as part of standard care management, Platoon Sergeants contacted the Service Members daily, by telephone, to monitor and address any logistical obstacles the Service Member might have encountered and consulted with other CBWTU staff members as needed.

In addition to the daily mCare questionnaires, participants completed study-related questionnaires about their well-being (i.e., quality of life, symptoms) and goals at baseline and every four weeks until the study ended or they out processed. At the end of their participation, participants were also asked to complete the System Usability Scale (SUS). Study-related surveys were administered through the online system called Survey Monkey®.

Additionally, participants were approached to complete semi-structured interviews about the usability of mCare. The interviewees were kept anonymous to the investigators by de-identifying the data in the report.

Measures

Usability. The study examined participants’ perceptions of usability with the SUS and semi-structured interviews.

The SUS was developed in 1986 and is a recognized industry standard.5 It is technology independent, has been widely used in health and medical research to assess the usability of hardware,6 consumer software,7 websites,8 and cell phone technologies,9 and provides consistent reliability even with small sample sizes.10 The SUS has 10 items, with responses ranging from strongly disagree to strongly agree (coded from 1 to 5). Each response is converted to a contribution from 0 to 4 (the response minus 1 for positively worded items, and 5 minus the response for negatively worded, reverse-coded items), so that all items are scored in the same direction. The ten contributions are summed and multiplied by 2.5 to obtain the overall SUS score, ranging from 0 to 100. In addition to the overall SUS score, the SUS has two sub-scores: usability and learnability.11,12 Learnability is a component of usability and is defined as “the degree to which a product or system can be used by specified users to achieve specified goals of learning to use the product or system with effectiveness, efficiency, freedom from risk and satisfaction in a specified context of use” (ISO/IEC 25010:2011, Section 4.2.4.2); put simply, it is the ease with which a user can learn how to use a software tool. The overall SUS score, usability sub-score, and learnability sub-score can be used to determine a percentile ranking and a corresponding conventional letter grade (‘A’ through ‘F’, with plus and minus modifiers), based on published baseline data for cell phone technologies.13 The letter grade indicates how the usability of mCare compares with that of other cell-phone-based tools and is straightforward to interpret.
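The scoring rules above can be written out as a short function. This is a sketch that assumes the conventional SUS item order (odd-numbered items positively worded, even-numbered items negatively worded) and the sub-score convention of Lewis and Sauro (reference 11), in which items 4 and 10 form the learnability sub-score (scaled by 12.5) and the remaining eight items form the usability sub-score (scaled by 3.125), so both sub-scores also range from 0 to 100. Whether the mCare analysis used exactly these scaling factors is an assumption, and the letter-grade lookup is omitted because its cut-points are not given here.

```python
# SUS item numbers (1-based) that Lewis and Sauro assign to learnability;
# the other eight items form the usability sub-score.
LEARNABILITY_ITEMS = (4, 10)


def item_contributions(responses: list[int]) -> list[int]:
    """Convert ten SUS responses coded 1-5 into 0-4 contributions."""
    if len(responses) != 10 or not all(1 <= r <= 5 for r in responses):
        raise ValueError("SUS requires ten responses coded 1 to 5")
    # Index 0 is item 1. Odd items are positively worded (response - 1);
    # even items are negatively worded and reverse-coded (5 - response).
    return [r - 1 if i % 2 == 0 else 5 - r for i, r in enumerate(responses)]


def sus_scores(responses: list[int]) -> dict[str, float]:
    """Return the total SUS score and the two sub-scores, each on 0-100."""
    c = item_contributions(responses)
    learn = sum(c[item - 1] for item in LEARNABILITY_ITEMS)
    usab = sum(c) - learn
    return {
        "total": sum(c) * 2.5,         # 10 items x 4 points x 2.5 = 100
        "usability": usab * 3.125,     # 8 items x 4 points x 3.125 = 100
        "learnability": learn * 12.5,  # 2 items x 4 points x 12.5 = 100
    }


print(sus_scores([5, 1, 5, 1, 5, 1, 5, 5, 5, 5]))
# {'total': 80.0, 'usability': 87.5, 'learnability': 50.0}
```

In the example, the hypothetical respondent agrees strongly with the negatively worded items 8 and 10, which drops the learnability sub-score to 50 while the total remains 80; this shows how the sub-scores can separate problems with learning a tool from problems with using it.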

The semi-structured interviews were conducted by the Sister Kenny Research Center (SKRC). The interviews took place over the telephone and were 10-15 minutes in duration. SKRC transcribed the interviews and analyzed the transcripts using qualitative analysis techniques. To assure quality of analysis, three team members coded three interviews from mCare, compared their results, discussed differences, and reached consensus on rule-based coding criteria. Valence was assigned to each response when applicable, representing the overall tone (i.e., positive vs. negative).
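Once each response has a consensus valence code, the per-question frequencies and percentages are simple tallies. A minimal sketch, assuming one code per interviewee per question and treating ‘positive’, ‘negative’, and ‘neutral’ as the only codes; the function names and the example codes are hypothetical and are not study data.

```python
from collections import Counter

VALENCES = ("positive", "negative", "neutral")


def valence_summary(codes: list[str]) -> dict[str, tuple[int, float]]:
    """Return {valence: (count, percent)} for one interview question."""
    counts = Counter(codes)
    unexpected = set(counts) - set(VALENCES)
    if unexpected:
        raise ValueError(f"unexpected valence codes: {sorted(unexpected)}")
    n = len(codes)
    return {v: (counts[v], round(100 * counts[v] / n, 1)) for v in VALENCES}


def positive_majority(summary: dict[str, tuple[int, float]]) -> bool:
    """True when positive responses outnumber negative and neutral combined."""
    # Compare raw counts rather than rounded percentages to avoid rounding ties.
    return summary["positive"][0] > summary["negative"][0] + summary["neutral"][0]


example = valence_summary(["positive"] * 8 + ["negative", "neutral"])
print(example)  # {'positive': (8, 80.0), 'negative': (1, 10.0), 'neutral': (1, 10.0)}
print(positive_majority(example))  # True
```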

Behavioral Health, mTBI and PTS Status. Due to the high rates of these problems among Service Members returning from Iraq and Afghanistan, the study sought to determine whether having a behavioral health problem, mTBI, or PTS was related to perceptions of usability. In this cohort, the most common behavioral health problems are depression and anxiety. We grouped participants as follows: a) no behavioral health problem, mTBI, or PTS; b) behavioral health problem(s) only; c) behavioral health problem(s) plus mTBI and/or PTS; and d) mTBI and/or PTS only. Because mTBI is not a behavioral health diagnosis and many of its symptoms are similar to those for PTS, mTBI and PTS were grouped together.
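The four-way grouping above reduces to a small decision rule. A sketch, assuming each condition is available as a yes/no flag per participant; the function name and the label strings are hypothetical.

```python
def bh_group(behavioral_health: bool, mtbi: bool, pts: bool) -> str:
    """Assign a participant to one of the four analysis groups (a-d)."""
    trauma = mtbi or pts  # mTBI and PTS are pooled into one indicator
    if behavioral_health and trauma:
        return "c) behavioral health + mTBI and/or PTS"
    if behavioral_health:
        return "b) behavioral health only"
    if trauma:
        return "d) mTBI and/or PTS only"
    return "a) none"


print(bh_group(behavioral_health=True, mtbi=False, pts=True))
# c) behavioral health + mTBI and/or PTS
```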

Technological and Environmental Characteristics. The study collected data on several technology and environmental factors that could potentially affect the participants’ experience of mCare, namely the type of phone model they had (iPhone, Android, other), wireless carrier/service provider (AT&T, Sprint, T-Mobile, Verizon), and population size of the town in which the participant lived. For population size, the analysis grouped participants according to whether their town was a) at or below the median population size for the sample or b) above the median population size for the sample. Population size was considered a proxy for wireless service coverage.
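The median split can be sketched as follows, assuming one town population value per participant (so the median is taken over participants rather than over distinct towns). The function name and the four example values are hypothetical; 682 and 420,003 simply echo the range reported in the Results.

```python
from statistics import median


def split_by_median(populations: list[int]) -> tuple[float, list[str]]:
    """Label each participant's town as at/below or above the sample median."""
    cut = median(populations)
    labels = ["at or below median" if p <= cut else "above median" for p in populations]
    return cut, labels


cut, labels = split_by_median([682, 5_000, 12_000, 420_003])
print(cut)     # 8500.0
print(labels)  # ['at or below median', 'at or below median', 'above median', 'above median']
```

Because the lower group is defined as “at or below” the median, participants who share the median town all fall into it, so with many ties that group can hold more than half of the sample.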

Usage Measures. The mCare system automatically collects data on the date and time at which a questionnaire or an appointment reminder was sent successfully, if and when it synced with a participant’s mobile phone, and if and when the participant responded. Using this information, the study team created five measures to characterize usage: 1) the total number of questionnaires successfully sent to the participant; 2) the total number of appointment reminders successfully sent to the participant; 3) the percentage of questionnaires that synced with the participant’s mobile phone; 4) the percentage of appointment reminders that synced with the participant’s mobile phone; and 5) the percentage of successfully synced daily mCare questionnaires that the participant responded to. A ‘sync’ occurred when the user logged into the mCare application, which initiated an automatic information exchange that made current content available on the user’s phone. Responding to the questionnaires required more effort on the part of the participant than other features of mCare.
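The five usage measures can be computed from per-message log records. This is a sketch under assumptions: the `MessageLog` record, its field names, and the example log are hypothetical (the actual mCare backend schema is not described here), and a percentage with a zero denominator is returned as NaN.

```python
import math
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class MessageLog:
    kind: str                                # "questionnaire" or "reminder"
    sent_ok: bool                            # sent successfully by the backend
    synced_at: Optional[datetime] = None     # when it synced to the phone, if ever
    responded_at: Optional[datetime] = None  # when the participant responded, if ever


def pct(num: int, den: int) -> float:
    return 100.0 * num / den if den else math.nan


def usage_measures(logs: list[MessageLog]) -> dict[str, float]:
    q = [m for m in logs if m.kind == "questionnaire" and m.sent_ok]
    r = [m for m in logs if m.kind == "reminder" and m.sent_ok]
    q_synced = [m for m in q if m.synced_at is not None]
    r_synced = [m for m in r if m.synced_at is not None]
    answered = [m for m in q_synced if m.responded_at is not None]
    return {
        "questionnaires_sent": len(q),                                            # measure 1
        "reminders_sent": len(r),                                                 # measure 2
        "pct_questionnaires_synced": pct(len(q_synced), len(q)),                  # measure 3
        "pct_reminders_synced": pct(len(r_synced), len(r)),                       # measure 4
        "pct_synced_questionnaires_answered": pct(len(answered), len(q_synced)),  # measure 5
    }


t = datetime(2010, 1, 4, 9, 0)
logs = [
    MessageLog("questionnaire", True, t, t),
    MessageLog("questionnaire", True, t, t),
    MessageLog("questionnaire", True, t),
    MessageLog("questionnaire", True),
    MessageLog("questionnaire", False),  # failed send: excluded from every measure
    MessageLog("reminder", True, t),
    MessageLog("reminder", True, t),
]
print(usage_measures(logs))
```

For this made-up log, four questionnaires and two reminders count as sent, 75% of questionnaires and 100% of reminders synced, and about 66.7% of synced questionnaires were answered. Measure 5 uses synced questionnaires as its denominator, so a participant is not penalized for content that never reached the phone.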

Other Background Characteristics. The analysis included age (years, ≥ 18), gender, marital status (single, married, divorced), living arrangement (alone, with a spouse or partner, or ‘other’, meaning with friends or family), military rank (Enlisted, Warrant Officer, Officer), CBWTU site (in Alabama [AL], Virginia [VA], Illinois [IL], or Florida [FL]), and number of weeks enrolled in the study. Educational attainment was not collected in this study; however, a minimum of a high school diploma is required to enlist in the United States military.

Analysis

First, the analysis summarized the background, technological, environmental, and usage characteristics of the sample and tested for differences between participants who completed the SUS and those who did not, using t-tests and Fisher’s Exact Test. Second, the analysis calculated the total SUS score and the usability and learnability sub-scores for the study participants overall (among those who responded to the SUS) and then by key participant characteristics. Background characteristics that differed between those who completed the SUS and those who did not were also included in the usability assessment. The analyses tested for group differences in SUS scores using Analysis of Variance (ANOVA) or t-tests. Third, for the semi-structured interviews, the analysis examined the frequency (and percentage) of each valence (i.e., positive, negative, neutral) among responses to each question pertinent to general mCare usage. Fourth, the analysis calculated Pearson correlation coefficients to determine the bivariate associations between usability and usage.
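The tests named above use standard statistics. The sketch below computes them with the Python standard library only, as an illustration rather than the study’s actual code: it assumes a pooled-variance (Student) t-test, since the text does not say whether a Welch correction was used, and it returns test statistics rather than p-values for the t-test, ANOVA, and correlation. In practice, `scipy.stats.ttest_ind`, `scipy.stats.f_oneway`, `scipy.stats.pearsonr`, and `scipy.stats.fisher_exact` return both statistics and p-values. All example data are hypothetical.

```python
import math
from statistics import mean, variance


def student_t(a: list[float], b: list[float]) -> float:
    """Two-sample t statistic with pooled variance."""
    na, nb = len(a), len(b)
    sp2 = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / math.sqrt(sp2 * (1 / na + 1 / nb))


def one_way_anova_f(*groups: list[float]) -> float:
    """F statistic: between-group mean square over within-group mean square."""
    values = [v for g in groups for v in g]
    k, n, grand = len(groups), len(values), mean(values)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation coefficient."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def fisher_exact_2x2(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact p-value for the table [[a, b], [c, d]]."""
    row1, col1, n = a + b, a + c, a + b + c + d

    def prob(x: int) -> float:
        # Hypergeometric probability of x in the top-left cell, margins fixed.
        return math.comb(col1, x) * math.comb(n - col1, row1 - x) / math.comb(n, row1)

    p_obs = prob(a)
    lo, hi = max(0, row1 - (n - col1)), min(row1, col1)
    # Sum every table no more probable than the observed one (small tolerance).
    return min(1.0, sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= p_obs * (1 + 1e-7)))


print(student_t([1, 2, 3], [4, 5, 6]))
print(one_way_anova_f([1, 2, 3], [4, 5, 6], [7, 8, 9]))  # 27.0
print(pearson_r([1, 2, 3, 4], [2, 4, 6, 8]))
print(fisher_exact_2x2(1, 9, 11, 3))
```

Fisher’s exact test fits the comparison of completers and non-completers on categorical characteristics because it stays valid when some cells are small, which is likely with sub-groups such as Warrant Officers.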

Ethics Statement

The study protocol was reviewed by the Institutional Review Board in the Department of Clinical Investigation at Walter Reed National Military Medical Center.

Results

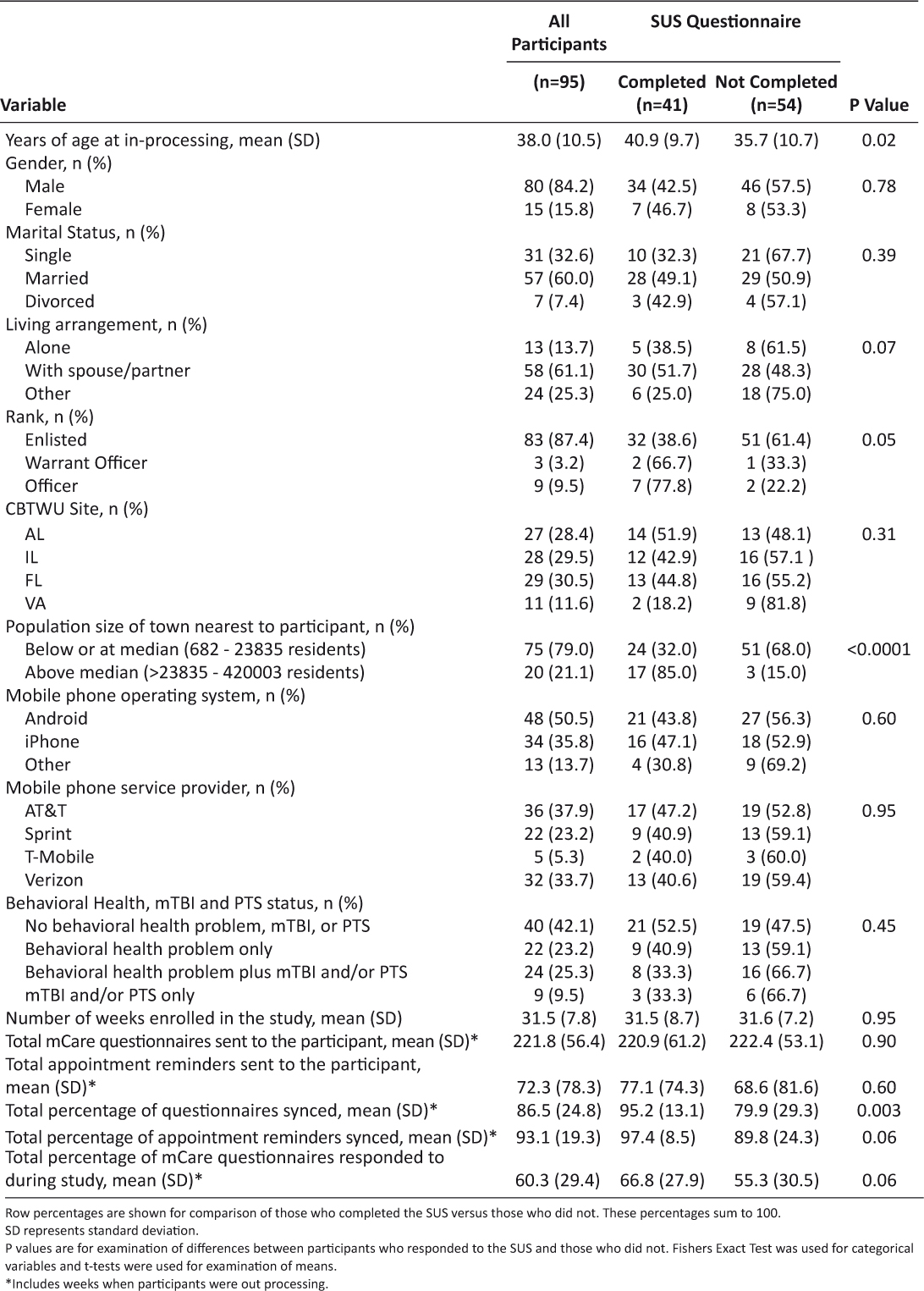

The average age of the study participants in the mCare arm at time of enrollment was 38 years (Table 1). Most participants were male (84.2%), married (60%), living with a spouse or partner (61.1%), and had the rank of ‘Enlisted’ (87.4%). Almost 51% of participants had Android phones, and 35.8% had iPhones. AT&T was the most widely used service provider (37.9%), followed by Verizon (33.3%). The population size of the towns participants resided in ranged from 682 to 420,003 residents. In the intervention arm, 79% lived in or near towns below the median population size. Forty-two percent had no behavioral health problem, mTBI, or PTS; 23.2% had a behavioral health problem only; and the remainder had mTBI and/or PTS, with or without a behavioral health diagnosis. Participants synced/retrieved 86.5% and 93.1% of the daily questionnaires and appointment reminders sent during the active intervention, respectively, and responded to 60.3% of the questionnaires synced.

Table 1: Characteristics of the mCare Participants, Overall and Whether They Completed the SUS Questionnaire

Forty-one (43.2%) of the mCare participants completed the SUS. On average, those who completed the SUS were older (p = 0.02), synced/retrieved more appointment reminders (p = 0.06), and responded to more questionnaires (p = 0.003) than those who did not. Also, a higher percentage of SUS completers than non-completers lived with a spouse/partner (p = 0.07), were of higher rank (p = 0.05), and lived in more populated towns (p < 0.0001).

The mCare application received high marks for the total SUS score. Mean total SUS score was 78 [standard deviation (SD) = 21.2], for a letter grade of ‘A’; mean usability sub-score was 76.7 (SD = 22.9), for a letter grade of ‘A’; and mean learnability sub-score was 82.0 (SD = 25.0), for a letter grade of ‘A+’ (Table 2). Participants with a behavioral health problem only gave mCare lower marks (‘C+’ for both total score and usability sub-score, and ‘B-’ for learnability sub-score), whereas participants with no behavioral health problem, mTBI, or PTS gave mCare an ‘A+’ for all domains. Although seemingly large, the differences in SUS scores by behavioral health, mTBI, or PTS status were not statistically significant. Similarly, SUS scores appeared to differ across age groups and by rank, but the differences were not statistically significant. Participants living with a spouse or partner gave mCare ratings of ‘A+’ across all domains, whereas participants in ‘other’ living arrangements gave mCare a ‘C’ for the total scale and a ‘D’ for the usability sub-score (p = 0.06).

Table 2: SUS Scores by Selected Participant Characteristics

With respect to technology and environmental measures, participants who used wireless carriers other than AT&T rated mCare as an ‘A-’ or better on all domains. Participants with AT&T gave mCare a ‘B+’ for the total score, a ‘B’ for the usability sub-score, and an ‘A+’ for learnability. For phone type, mCare again received letter grades of ‘A’ or better, except from iPhone users, who gave mCare a ‘B’ for total score and a ‘C+’ for usability sub-score (p = 0.07).

Forty-nine of the 95 (51.6%) mCare participants completed semi-structured interviews. For all questions, the percentage of positive responses was greater than the percentage of negative and neutral responses combined. The question with the most favorable response (91.8% positive) was about how mCare facilitated communication with the care team (Table 3). The questions with the least favorable responses were about reporting pain (22.4% negative or neutral, with 85.7% of interviewees saying pain had been a concern during the study) and the Appointments/Reminder feature (22.4% negative or neutral). In response to the question about communication, one interviewee said: “I think it was a good program actually, the way you can put everything in your phone… One thing I didn’t like, the program is a little slow. If you want to load up five or six appointments at a time because you have a lot of appointments, it always seemed like it took a lot….” For the pain question, the negative and neutral responses concerned usefulness more than usability per se. One interviewee said, “If I told you that at noon my pain was three and then at one pm it was seven and then at five pm it went back to three…. and it was awful when I woke up, what would you do about it?”

Table 3: Valence Ratings of mCare Participants’ Responses to Usability Questions in Semi-Structured Interviews

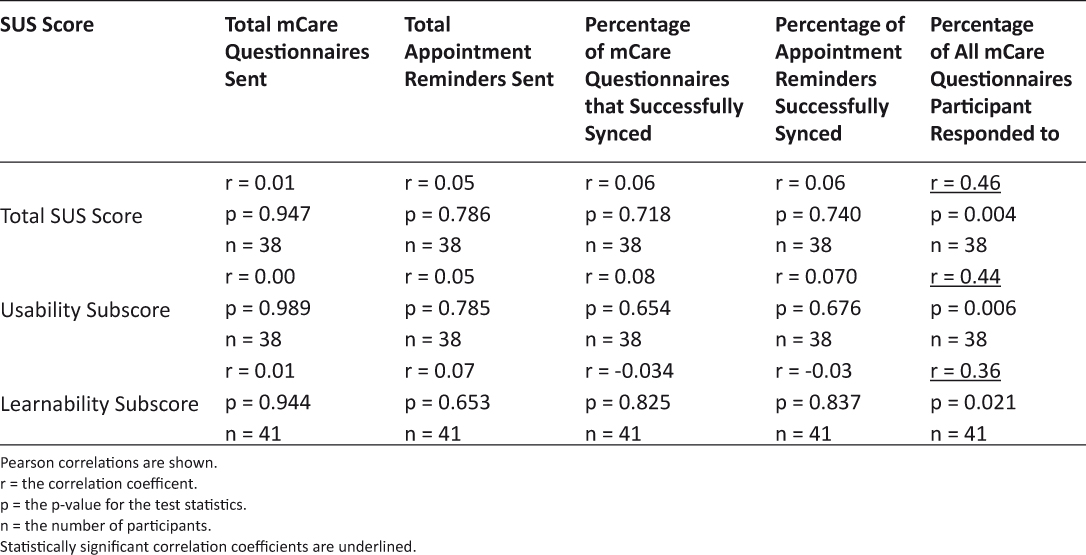

The correlation coefficients show that completion of more of the daily questionnaires was associated with higher total SUS scores (r = 0.46, p = 0.004), higher usability sub-scores (r = 0.44, p = 0.006), and higher learnability sub-scores (r = 0.36, p = 0.021) (Table 4).

Table 4: Correlations among Usability and Usage Measures

Discussion

Overall, results from the SUS and semi-structured interviews indicate that users generally rated the mCare application favorably. The heterogeneity of the cohort served in the mCare RCT warranted analysis of whether and how ratings of mCare differed across participant subgroups. This type of analysis concerns the ‘fit’ between a person and the technology, and can help guide a provider in determining who would benefit from using a particular technology to support their self-care.

Previous research shows that information technologies, such as the Internet, phones, and social media, can improve patient adherence, information exchange between providers and patients, and outcomes.14 With respect to mobile phones specifically, one meta-analysis of 16 studies showed that mobile phone text messaging led to a significant increase in medication adherence,15 and a systematic review of seven interventions for cardiovascular disease management found mobile phone applications effective in increasing medication adherence and physical activity.16 Likewise, a pilot study of mCare found an increase in adherence to health care appointments.17

Many health care providers recognize that, however necessary self-care is, it is not necessarily their patients’ highest priority, and patients often have competing priorities. Usability is rarely examined in this context: Sawesi and colleagues’ systematic review found that only about one-third of the 170 studies examined addressed usability.14 The mCare team hypothesized that a more satisfying user experience would lead to consistent and sustained usage. The results of this analysis are consistent with that hypothesis, showing that higher participant response to the daily questionnaires, the most intensive aspect of the mCare application, was statistically associated with higher ratings of usability. A previous analysis of mCare also found that patient engagement was high (participants responded to over 60% of the daily questionnaires) throughout the 36 weeks of the intervention.1 The present analysis, however, cannot clarify how much usability drives usage and how much usage drives perceptions of usability.

Moreover, this examination showed that ratings of usability were lower among participants who used an iPhone. Differences in perceived usability by phone type matter because the study was designed on a bring-your-own-device (BYOD) model, which is consistent with what is likely to happen when health care providers offer patients mobile applications such as mCare. The study team had to make mCare compatible with over 400 different phone models and available on all four major United States (US) cellular service providers in 2008. This meant designing mCare with the limitations of certain phone makes and models in mind. Because the iPhone historically led the trend toward mobile applications and is known for its attention to user experience, it is not surprising that mCare did not meet iPhone users’ expectations.

Usability ratings were also lower among participants who used AT&T as their carrier, though the difference was not statistically significant. Although AT&T is the second largest US network, coverage maps from OpenSignal (http://opensignal.com/) show that, at the time of the RCT, the density of its tower locations was lower than that of the other networks in the areas where the study participants were located, and its 3G download speed was slower than that of its competitors (e.g., 2.4 Mbps versus 4.7 Mbps for T-Mobile).18 This could have made it more difficult for users to sync with mCare to obtain the information being sent to them, particularly the links to the questionnaires. As of 2017, however, the situation is different: the user interface and functionality of mobile phones have converged across brands, and cellular coverage and download speeds have grown substantially.

Usability ratings were also lower among participants who lived alone or in a setting other than with a spouse/partner. A possible explanation is an interaction between age and living arrangement. That is, although usability ratings did not differ significantly across age groups, the intersection of age and living arrangement may matter. Study participants in ‘other’ living arrangements tended to be younger (42.9% of them were in their 20s, compared with 0% of participants who lived alone and 19.0% of participants who lived with a spouse/partner), and study participants who lived alone tended to be older (40.0% of them were older than 50 years, compared with 19.0% of those who lived with a spouse/partner and 28.6% of those in ‘other’ arrangements). Research suggests that age groups differ in their expectations of mobile phones and applications, with younger cohorts tending to have higher expectations.19,20 Alternatively, not having a significant other to assist with mCare might have affected perceptions of usability in this cohort of injured Service Members. The study design cannot address these questions directly because the sub-groups are too small, so they remain opportunities for future research.

The results showed that usability scores were lowest for participants who had a behavioral health problem only; in this cohort, these were mainly anxiety and depression. A literature review spanning three decades concluded that patients with depression and/or anxiety are less likely to be compliant with treatment and therefore have poorer outcomes.21 Although the differences were not statistically significant, the lower usability scores could reflect patients with behavioral health problems experiencing poorer overall outcomes and attributing those outcomes to the mCare system. By contrast, the results for participants with mTBI and/or PTS are consistent with a previous study showing that people who have experienced a trauma are receptive to using mobile tools to help track symptoms.22

A limitation of this study is that only 43.2 percent of the participants who received mCare completed the SUS at the time of their exit from the study. Participants in the standard care management group had a similar rate of missing data on study questionnaires, which suggests that dissatisfaction with mCare was not necessarily the reason for non-response on the SUS. The analysis attempted to account for non-response on the SUS by testing for differences in background characteristics between those who completed the SUS and those who did not, and then looking for associations between salient background characteristics and usability. Those who completed the SUS were older, responded to more questionnaires, were of higher rank, and lived in more populated towns, and they tended to be living with a spouse/partner and to sync more appointment reminders. As noted above, living arrangement was also associated with usability ratings. Other background characteristics were similar between those who completed the SUS and those who did not, suggesting that the observations about usability may generalize to the rest of the study sample, subject to these differences.

When the mCare study was launched, data plans were not as widely available or as inexpensive as they are in 2017. On one hand, the cost of using mCare was potentially a concern for Service Members who had limited economic resources, had limited data plans, and/or could not afford overages, and excluding these Service Members could have resulted in bias. On the other hand, offering high stipends can unintentionally introduce a different kind of bias and can also be coercive. The study tried to balance these issues by offsetting the costs of mCare with a subsidy for participants’ cellular service plans of $50 a month for unlimited data and voice, which was in line with the cost of that level of service across all service providers. The current cellular service market supports much higher levels of cellular usage and smartphone adoption than when this study was conducted.

Conclusion

This evaluation of the mCare application for injured Service Members rehabilitating in their communities found it to be usable and easy to learn. Further, relative to the large database of SUS scores for mobile tools that was used to develop the letter-grade scheme for interpreting individual scores, mCare scored high marks. Type of phone, living arrangement, and amount of usage of the application were related to usability scores, although the differences by phone type and living arrangement were only marginally significant. Although not statistically significant, usability was rated lower by people with behavioral health problems, those who were older, and/or those who had AT&T as their carrier. These factors should be considered when determining which patients would benefit from using mCare in the future.

Acknowledgements

This study was funded by the Office of Force Health Protection, and managed by the Mobile Health Innovation Center (MHIC), an intramural laboratory of the Telemedicine and Advanced Technology Research Center (TATRC), United States Army Medical Research and Materiel Command (USAMRMC).

The authors would like to thank the following individuals for their contributions towards conducting the mCare clinical research study: Mike Bairas, Joyce Bennett, Charles Blair, Mabel Cooper, Eric Cutlip, COL (Ret) Karl Friedl, Ryan Fung, Lois Goldstein, Cindy Gilley, Willie Ho, Enouch Hui, Kurt Huttar, Jonathan Kean, Marsha Ma, Amanda Martin, Mahvish Malik, COL (Ret) Eileen McGonagle, COL (Ret) Fran McVeigh, COL (Ret) Ron Poropatich, April Pradier, Mary Radomski, Celicia Thomas, COL (Ret) Johnnie Tillman, Matthew White, Rick Wise, Ping Xiang, Sarmid Youhanna, and Edmound Yu. Additionally, the authors would like to express their gratitude to the staff at the Community-Based Warrior Transition Units (CBWTUs) in Alabama, Florida, Illinois, and Virginia where the study took place.

The views and opinions expressed in this article are those of the authors and do not reflect the official policy or position of the Department of the Army, Department of Defense, or the U.S. Government.

Declaration of Competing Interests

All authors have completed the Unified Competing Interest form at www.icmje.org/coi_disclosure.pdf (available on request from the corresponding author) and declare: HP, MC, LG, JT and SF had financial support from Geneva Foundation (recipient of a grant from the Office of Force Health Protection, and managed by the Mobile Health Innovation Center, an intramural laboratory of the Telemedicine and Advanced Technology Research Center, United States Army Medical Research and Materiel Command) for the submitted work; no financial relationships with any organisations that might have an interest in the submitted work in the previous 3 years; no other relationships or activities that could appear to have influenced the submitted work.

References

1. Pavliscsak H, Little J, Poropatich R, et al. Assessment of Patient Engagement with a Mobile Application among Service Members in Transition. J Am Med Inform Assoc 2016;23:110–8.

2. Schnall R, Rojas M, Bakken S, et al. A user-centered model for designing consumer mobile health applications (apps). J Biomed Inform 2016 Feb 20. pii: S1532-0464(16)00024-1. doi: 10.1016/j.jbi.2016.02.002. [Epub ahead of print].

3. Wicklund ME, Wilcox SB. Designing Usability into Medical Products. Boca Raton, FL: CRC Press 2005.

4. Fonda SJ, Paulsen CA, Perkins J, et al. Usability test of an Internet-based informatics tool for diabetes care providers: the Comprehensive Diabetes Management Program. Diabetes Technol Ther 2008;10:16–24.

5. Brooke J. SUS: a “quick and dirty” usability scale. In: PW Jordan, B Thomas, BA Weerdmeester, AL McClelland, eds. Usability Evaluation in Industry. London: Taylor and Francis, 1996: 189–194.

6. Randell R, Backhouse MR, Nelson EA. Videoconferencing for site initiations in clinical studies: Mixed methods evaluation of usability, acceptability, and impact on recruitment. Inform Health Soc Care 2015;22:1–11.

7. Sousa VE, Lopes MV, Ferreira GL, et al. The construction and evaluation of new educational software for nursing diagnoses: a randomized controlled trial. Nurse Educ Today 2016;36:221–9.

8. Starling R, Nodulman JA, Kong AS, et al. Usability testing of an HPV information website for parents and adolescents. Online J Commun Media Technol 2015;5:184–203.

9. Kalz M, Lenssen N, Felzen M, et al. Smartphone apps for cardiopulmonary resuscitation training and real incident support: a mixed-methods evaluation study. J Med Internet Res 2014;19:3.

10. Sauro J. Measuring Usability with the System Usability Scale (SUS). February 2, 2011. http://www.measuringu.com/sus.php. Accessed March 15, 2016.

11. Lewis JR, Sauro J. The factor structure of the system usability scale. International conference (HCII 2009), San Diego, CA, USA.

12. Borsci S, Federici S, Lauriola M. On the dimensionality of the System Usability Scale: a test of alternative measurement models. Cognitive Processing 2009;10:193–197.

13. Bangor A, Kortum PT, Miller JT. Determining what individual SUS scores mean: adding an adjective rating scale. J Usability Stud 2009;4:114–123.

14. Sawesi S, Rashrash M, Phalakornkule K, et al. The impact of information technology on patient engagement and health behavior change: a systematic review of the literature. JMIR Med Inform 2016;4(1):e1.

15. Thakkar J, Kurup R, Laba TL, et al. Mobile telephone text messaging for medication adherence in chronic disease: a meta-analysis. JAMA Intern Med 2016 Feb 1. doi: 10.1001/jamainternmed.2015.7667. [Epub ahead of print]

16. Pfaeffli Dale L, Dobson R, Whittaker R, et al. The effectiveness of mobile-health behaviour change interventions for cardiovascular disease self-management: A systematic review. Eur J Prev Cardiol 2015 Oct 21. pii: 2047487315613462. [Epub ahead of print]

17. Poropatich RK, Pavliscsak HH, Tong JC, et al. mCare: using secure mobile technology to support soldier reintegration and rehabilitation. Telemed J E Health 2014;20:563–9.

18. OpenSignal.com. http://opensignal.com/network-coverage-maps/. Accessed February 25, 2016.

19. Kumar A, Lim H. Age differences in mobile service perceptions: comparison of Generation Y and baby boomers. Journal of Services Marketing 2008;22:568–577.

20. Mark MH, Goode FD, Luiz M, et al. Determining customer satisfaction from mobile phones: a neural network approach. Journal of Marketing Management 2005;21:755–778.

21. DiMatteo MR, Lepper HS, Croghan TW. Depression is a risk factor for noncompliance with medical treatment: meta-analysis of the effects of anxiety and depression on patient adherence. Arch Intern Med 2000;160:2101–7.

22. Price M, Sawyer T, Harris M, et al. Usability evaluation of a mobile monitoring system to assess symptoms after a traumatic injury: a mixed-methods study. JMIR Ment Health 2016;3:e3.